Table of contents

Open Table of contents

Intro

In React, if a state variable is an array and one element in the array needs to be changed, the array itself cannot be mutated directly (because it’s state).

Standard React practice is to create a new array from scratch and then replace the element that needs to be changed.

This prompted a few questions from me as there are multiple ways to do this in JS:

- what approach is the fastest if we want to clone an array and replace an element in it?

- does the answer to point 1 change with the JS engine being used, i.e. with the browser?

- does the answer to point 1 change with the array size (10, 1000, etc)?

A little experiment (or, better yet, benchmark) was is order, so I tested out a few different approaches (JSBench link is further down).

Note: since the entire array must be copied anyway, the index of the item to replace doesn’t have a large impact performance-wise except for A5 which uses splice().

Thus, I picked as index the middle of the array. It’s still O(N) but it’s not a splice starting from element 0 or from the last.

Rules of data collection

Browsers employed

The browsers used to test out the various approaches are the two heavyweights of desktop browsing: Chrome and Firefox. To be exact:

- Chrome Version 115.0.5790.110 (Official Build) (64-bit) with no extensions;

- Mozilla Firefox 115.0.2 (64-bit) with no extensions.

Chrome’s JS engine is called “V8”, while Firefox’s is called “SpiderMonkey”.

Array sizes

Each approach is run against three different array sizes (in terms of elements): 10, 1000, 100K (100000).

Averages

Datapoints are in the shape of “this approach allows X operations/second”, i.e. speed of execution for a certain approach.

Each datapoint is calculated as the average of 3 runs, with the formula 0.333*X + 0.333*Y + 0.333*Z (X, Y, Z being the run values).

“How come only 3 runs?”

The reason is that Chrome is rather slow with JSBench, taking 5-7m for my test environment to run, while Firefox takes ~1m instead.

9 runs on Chrome (3 per array size, with sizes being 10, 1000, 100K) would take about an hour, and that’s just the raw runs, without considering the Excel part!

Therefore, in the interest of time, the average is calculated on 3 runs.

Most significant digit

In an effort to stay faithful to measurement principles (I did do two exams on the matter, I can’t simply pretend they don’t exist), final datapoints take the most significant digit of the source datapoints into account, i.e. they won’t be “more precise” numbers.

To give an example:

- three runs give datapoints 4400, 4500, 4500

- raw average is 4466.666…

- actual average, taking the most significant digit (the second 4 from the left) into account, is 4500

Error margin

Each final datapoint shown in this post has |error margin| < 4.07% (i.e. at worst +- 4.07%).

Why? The worst error margin given by JSBench was 4.07% and the averages calculated in Excel use formula c*X + c*Y +c*Z where c = 0.333, thus:

|error margin| < 0.333*4.07% + 0.333*4.07% + 0.333*4.07% = 4.07%.

To be fair, most error margins I witnessed were, taking out the sign, < 3%, so error is at least 1% higher than that, but I didn’t write it together with the datapoints for brevity’s sake.

Code

This is the test environment that I wrote on JSBench.me (setup plus the various approaches, in the same order of appearance as on JSBench).

Setup

const item = {

prop1: "xyz",

prop2: 5,

prop3: false,

};

const arr_size = 10;

const start_array = Array(arr_size).fill(item);

const new_item = {

prop1: "abc",

prop2: 12,

prop3: true,

};

const new_item_index = start_array.length / 2 - 1;A1: C-style for-loop

const new_array = Array();

for (let i = 0; i < start_array.length; ++i) {

if (i === new_item_index) {

new_array.push(new_item);

} else {

new_array.push(item);

}

}A2: Array.prototype.map()

const new_array = start_array.map((item, index) => {

if (index === new_item_index) {

return new_item;

} else {

return item;

}

});A3: Array.prototype.forEach() with pre-allocated Array

const new_array = Array(arr_size);

new_array.forEach((_, index) => {

if (index === new_item_index) {

new_array[i] = new_item;

} else {

new_array[i] = start_array[i];

}

});A4: C-style for-loop with pre-allocated Array

const new_array = Array(arr_size);

for (let i = 0; i < start_array.length; ++i) {

if (i === new_item_index) {

new_array[i] = new_item;

} else {

new_array[i] = item;

}

}A5: Array.prototype.slice() and .splice()

const new_array = start_array.slice();

new_array.splice(new_item_index, 1, new_item);A6: Array.prototype.slice() and replace

const new_array = start_array.slice();

new_array[new_item_index] = new_item;Results

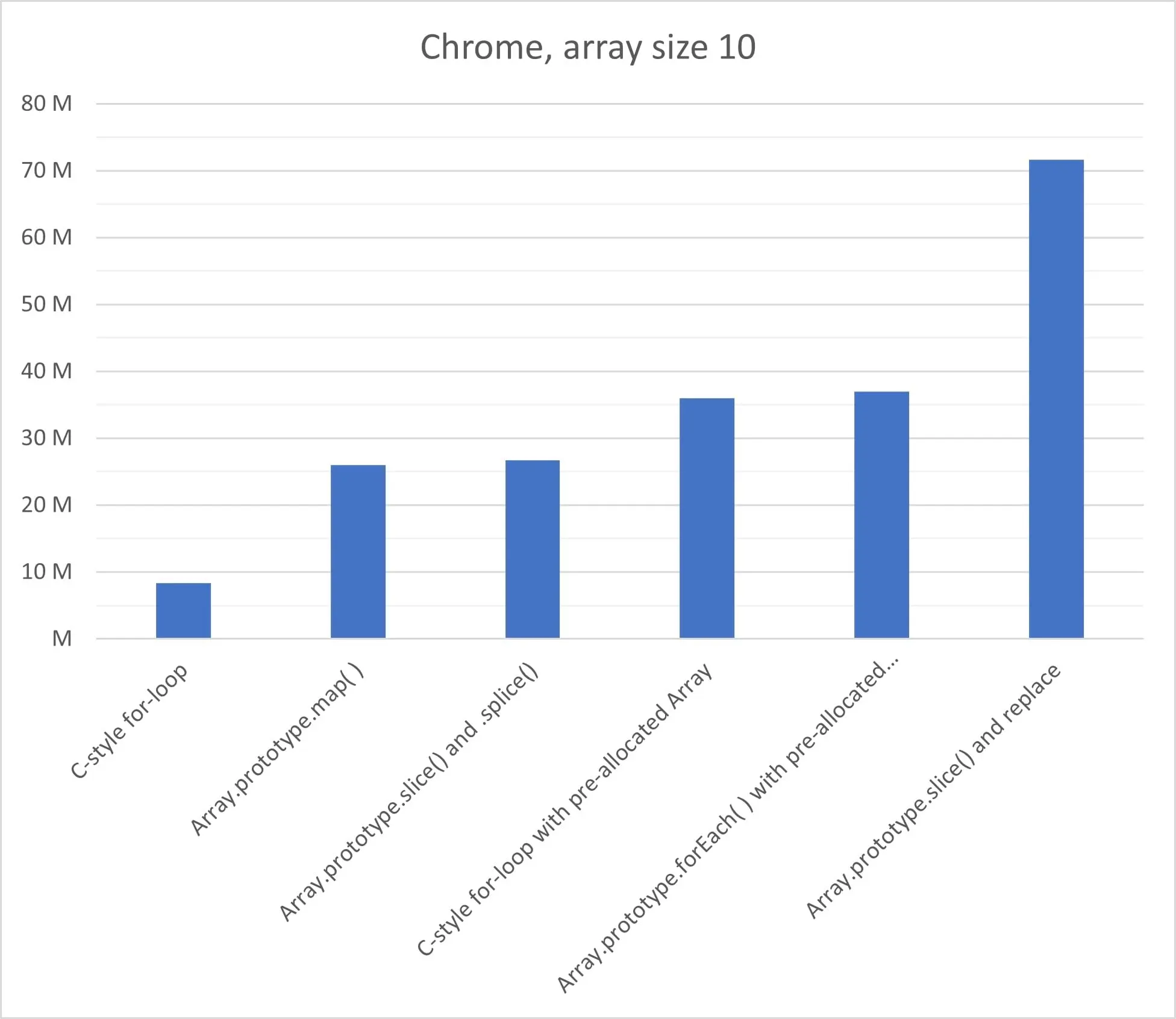

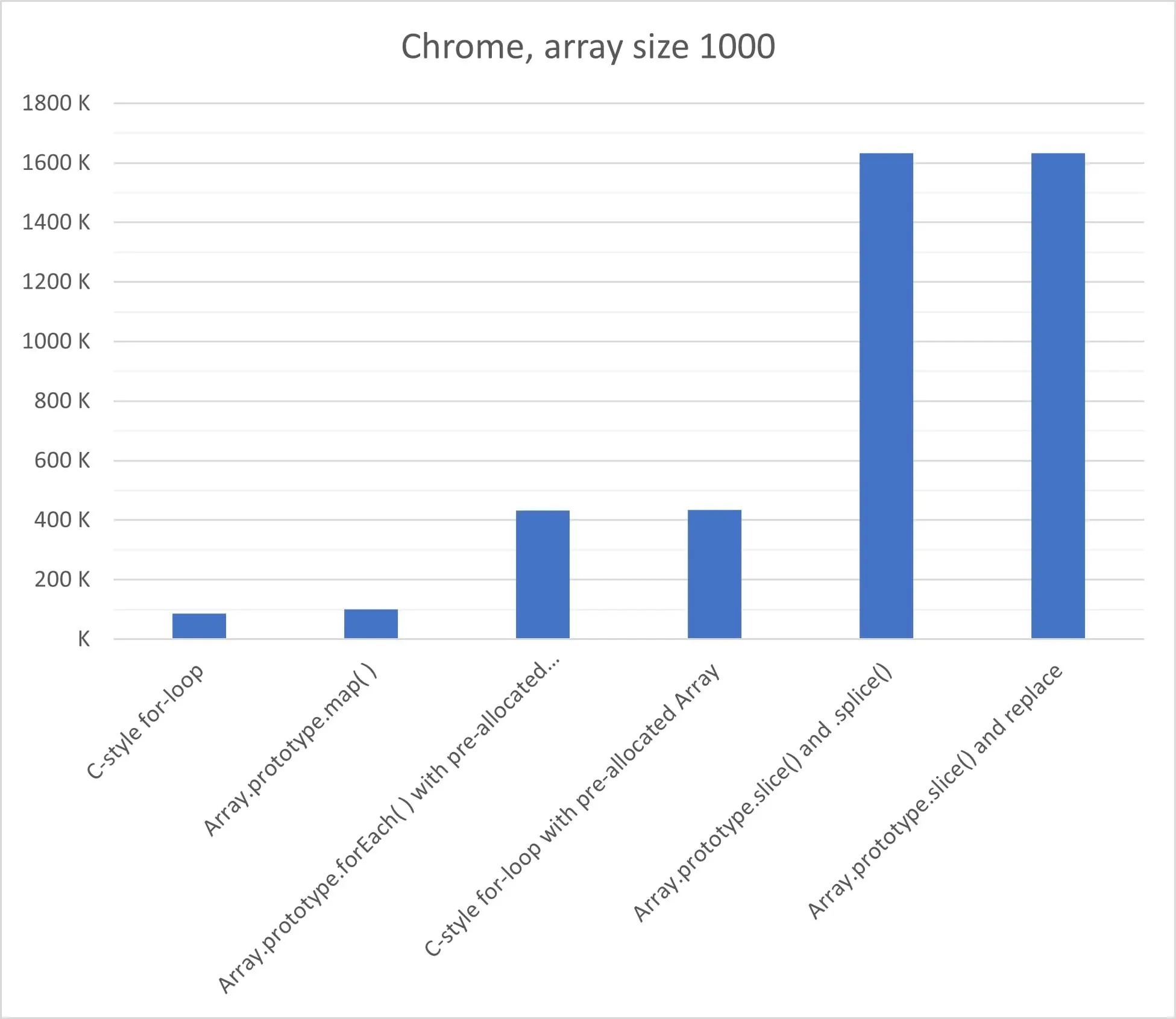

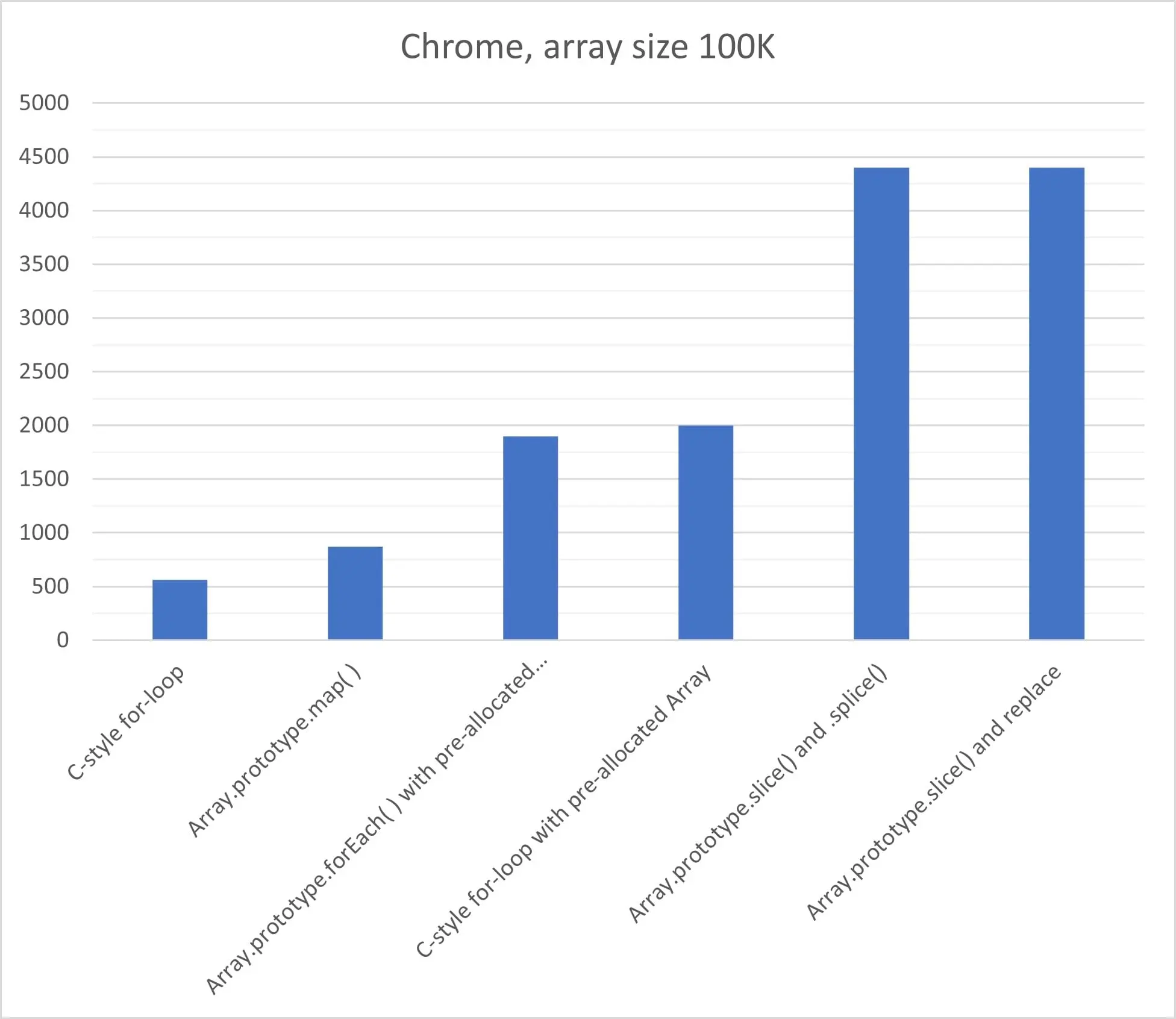

Google Chrome

Charts first:

Chrome has shown consistency in its results: A6 “Array.prototype.slice() and replace” is the fastest approach at small sizes and wins ex-aequo with A5 “Array.prototype.slice() and .splice()” for middle and big sizes.

The overhead of calling Array.prototype.splice() is clearly visible for small sizes but becomes negligible at bigger sizes.

Lastly, I was rather surprised by how poorly the classic A1 “C-style for-loop” fared. It’s a staple in C++ but apparently not in JS.

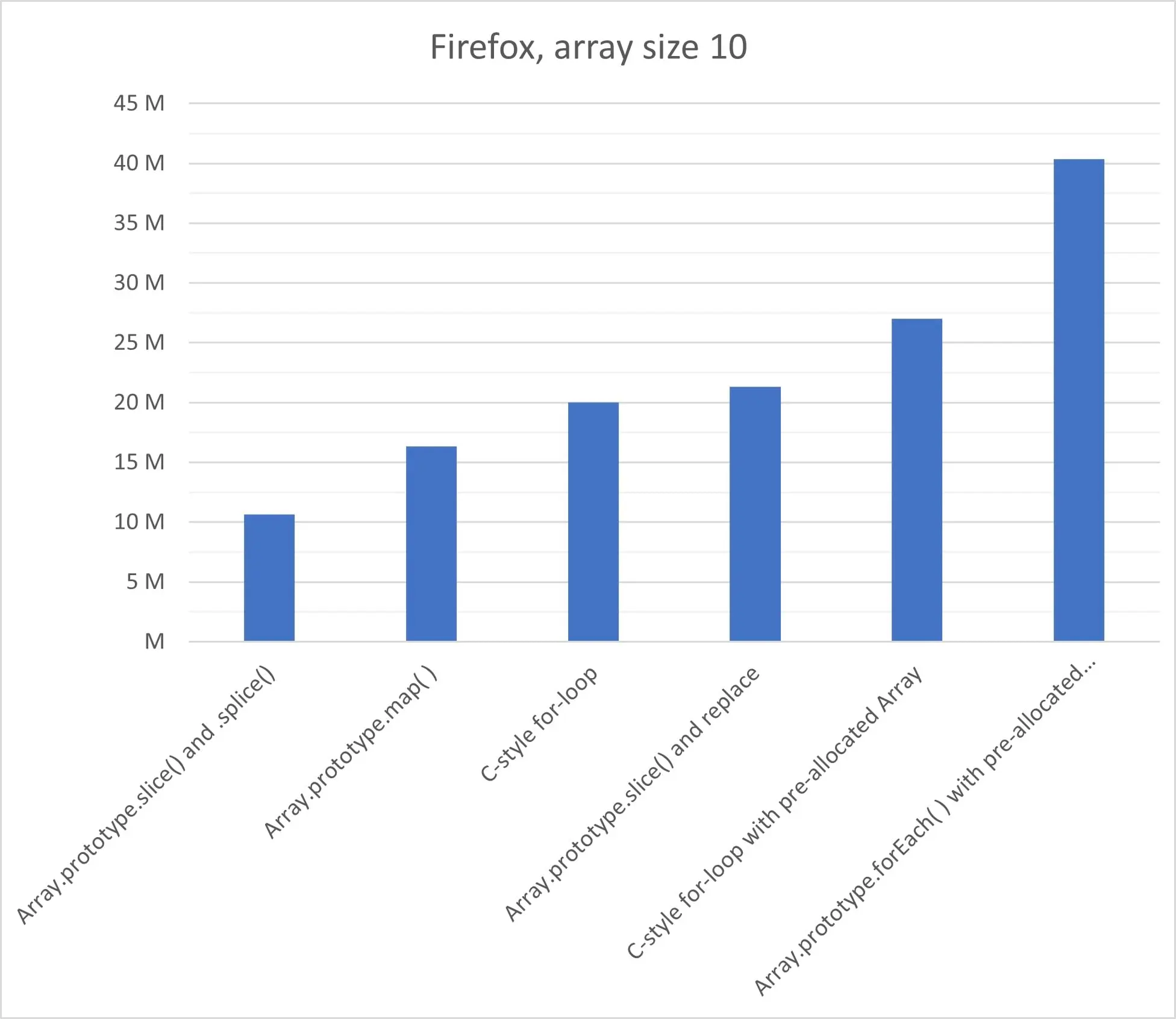

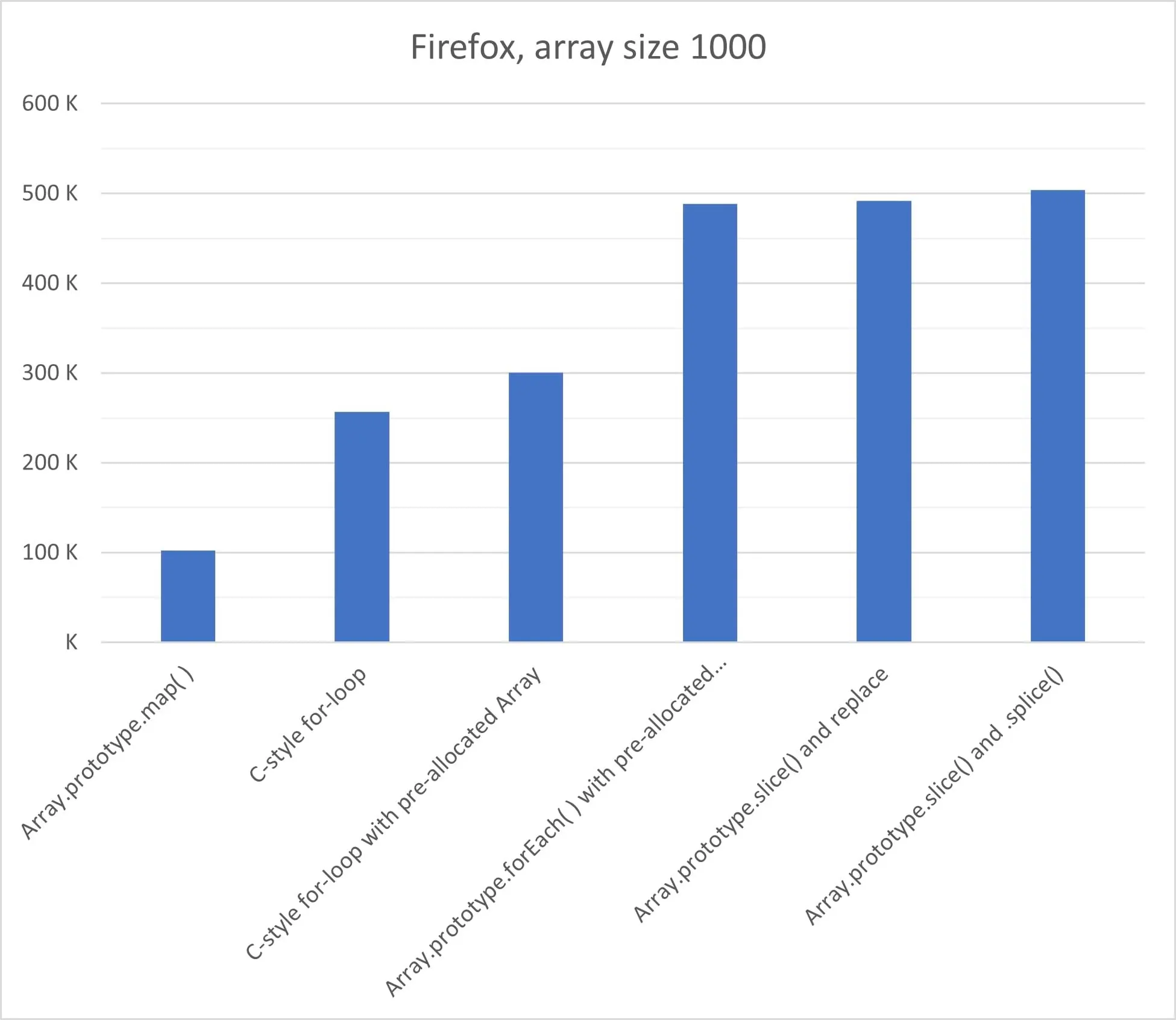

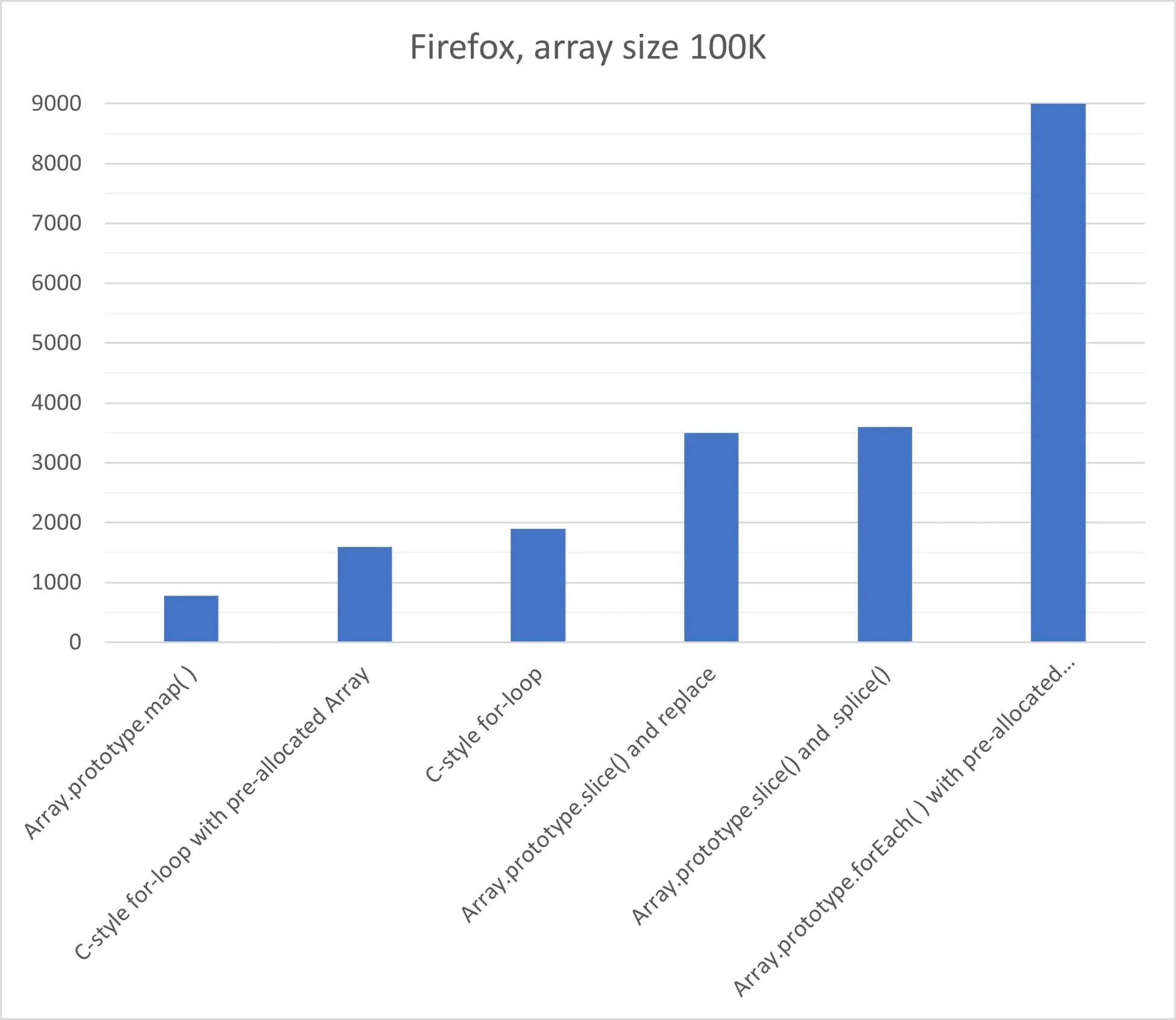

Mozilla Firefox

Firefox had instead some twists and turns in store!

At small and big sizes, A3 “Array.prototype.forEach() with pre-allocated Array” takes the crown and with quite the margin.

At small and big sizes, A3 “Array.prototype.forEach() with pre-allocated Array” takes the crown and with quite the margin.

However, at middle sizes the winner is A5 “Array.prototype.slice() and .splice()” in a close battle with A6 and A3, with A3 being within 3.3% of A5, enough for their error margins to overlap and thus for A5->A6->A3 not to be a conclusive podium.

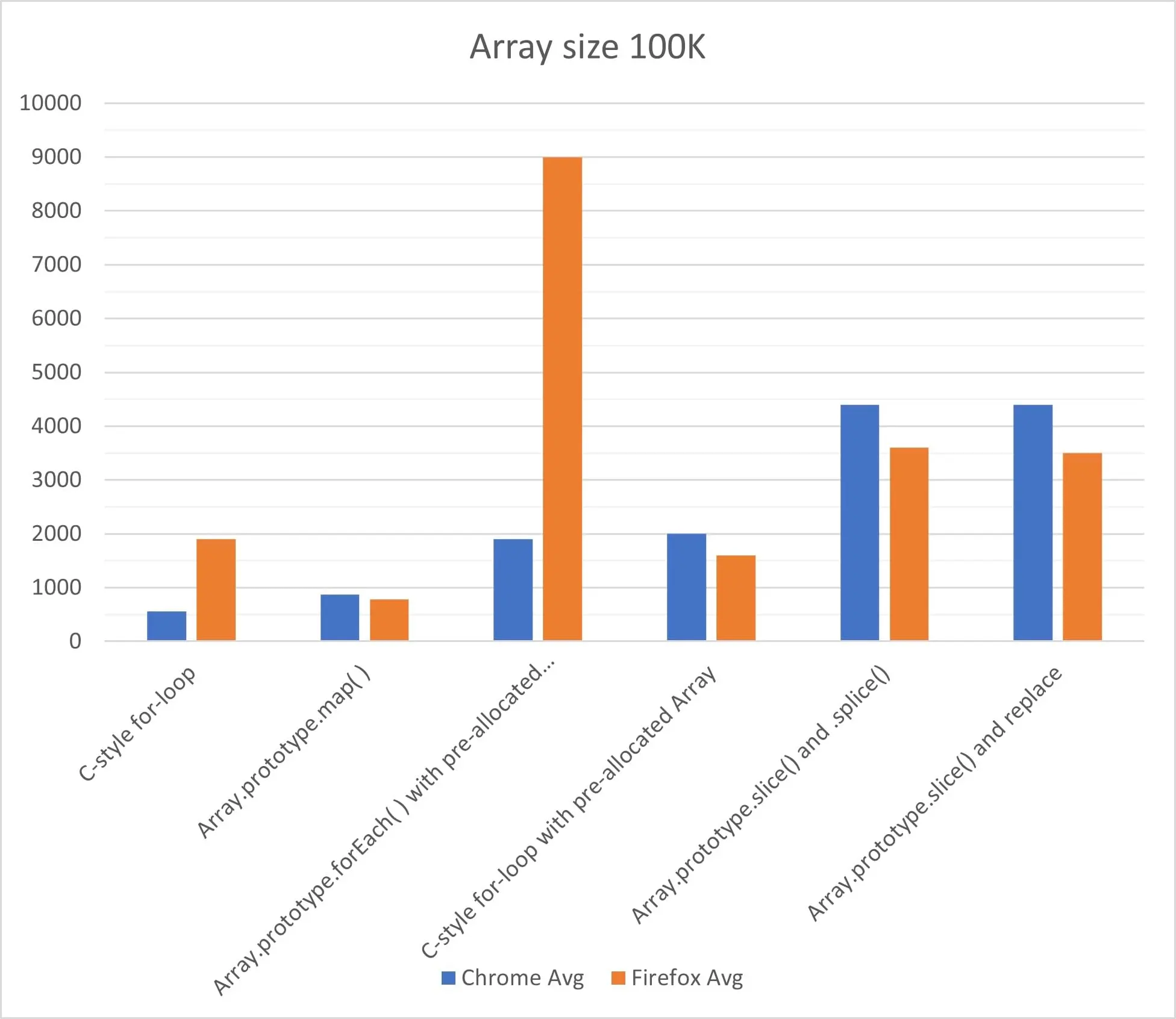

Browsers compared

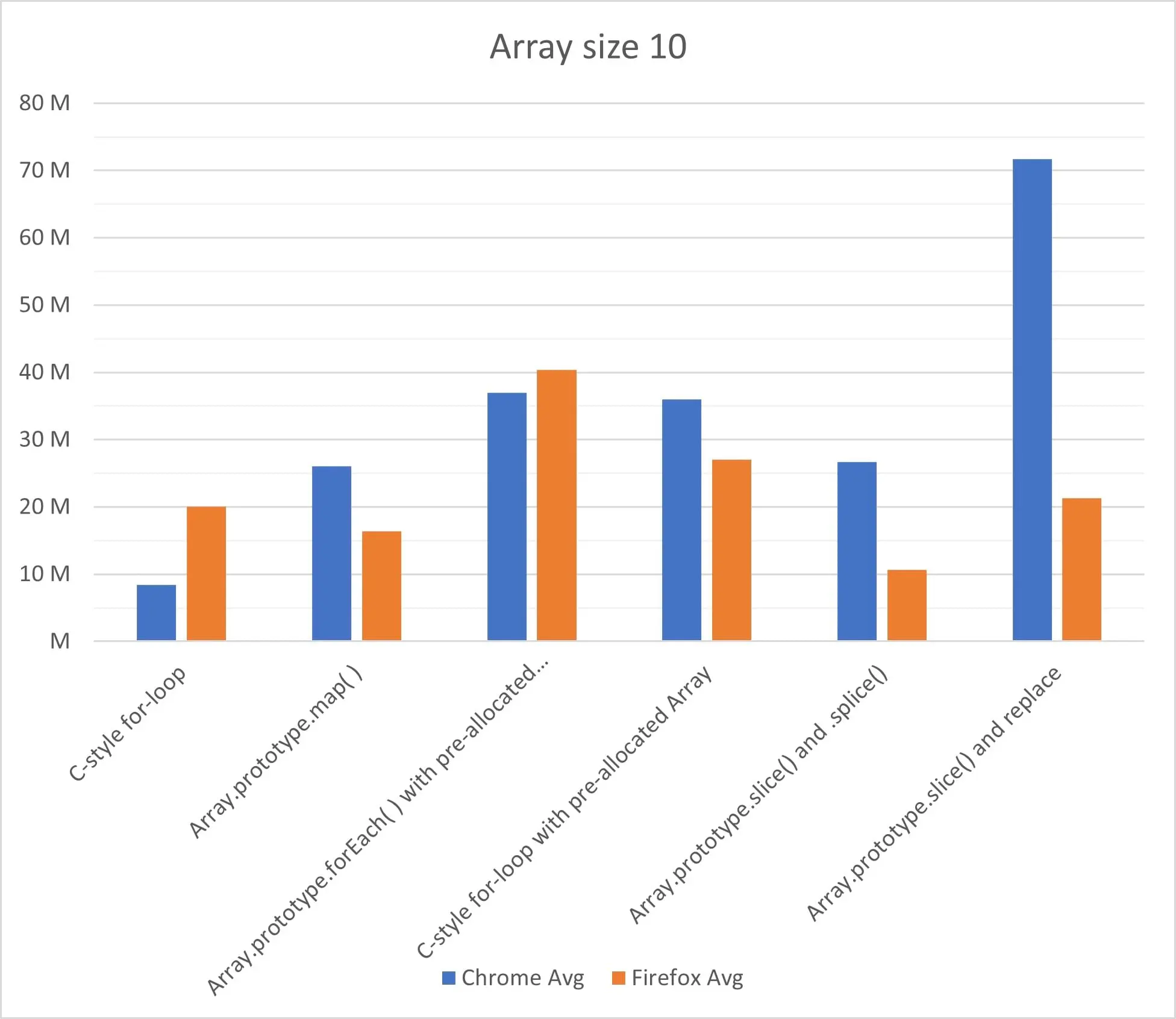

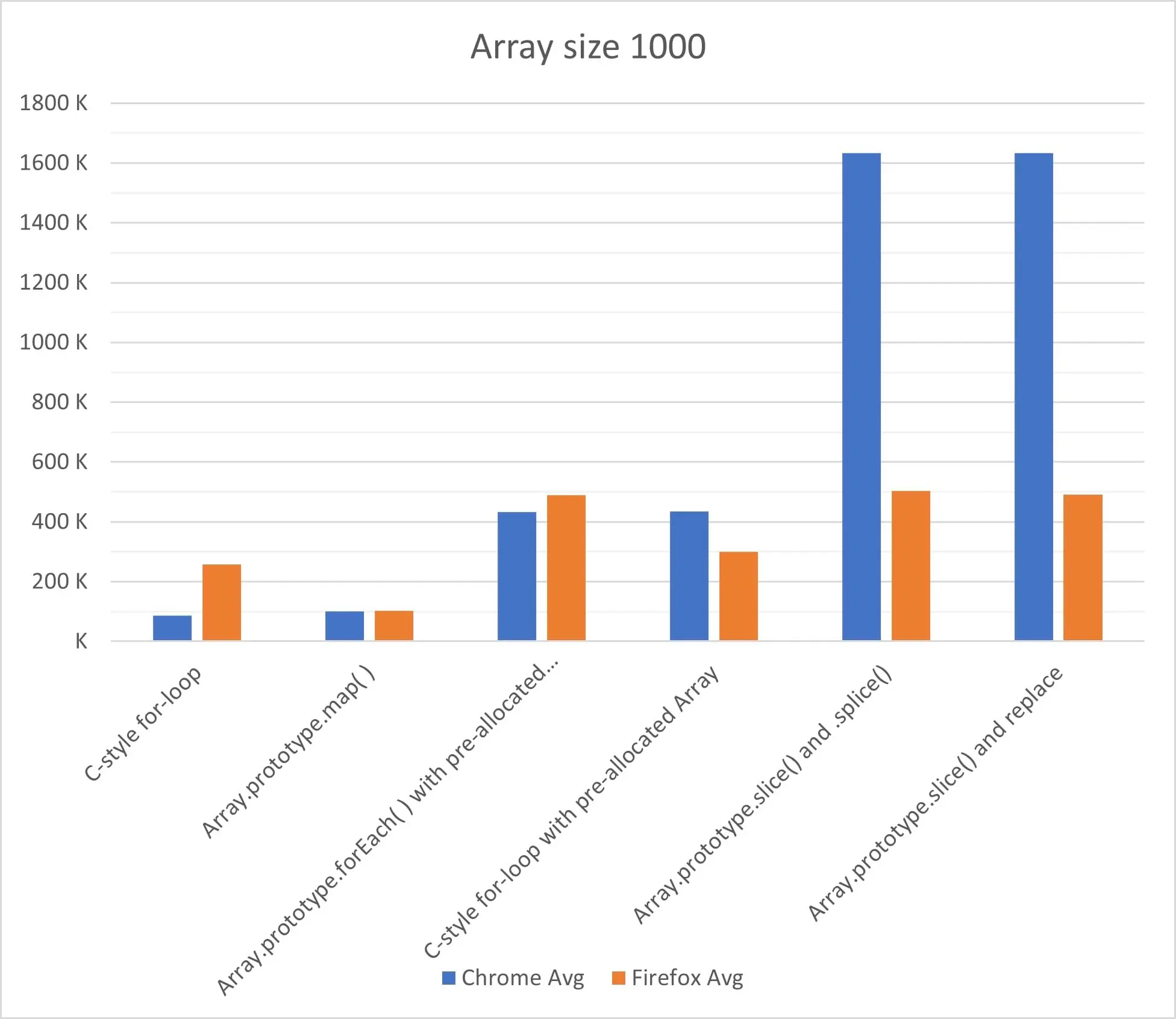

So far, the analysis has looked at results from a per-browser perspective, i.e. “what’s fastest on browser X?“.

This section instead compares the performance of the two browsers on the six approaches, them being benchmarks in a way.

What emerges from this is that Firefox is much slower than Chrome on small and middle array sizes, with peak performance going from ~1.8x at size 10 to ~3.2x at size 1000.

However, Firefox shines on large sizes, with peak performance ~2x that of Chrome.

What emerges from this is that Firefox is much slower than Chrome on small and middle array sizes, with peak performance going from ~1.8x at size 10 to ~3.2x at size 1000.

However, Firefox shines on large sizes, with peak performance ~2x that of Chrome.

This means there’s an inflection point in terms of array size where Chrome starts losing performance to Firefox instead of gaining it, until they reach performance parity at size = N and then Firefox surpasses Chrome from that point on.

Looking more closely, it can be observed that Firefox is slower than Chrome on all approaches except A1 “C-style for-loop” (nothing to call home for, unfortunately) and, more importantly, A3 “Array.prototype.forEach() with pre-allocated Array” (performing much better than Chrome at large sizes, see the ~2x peak performance mentioned earlier).

Caveats

.slice() makes shallow copies, it doesn’t go further than one level down, so its use in cloning an array that is React state to then modify it should only be considered if the array elements are simple types (number/string/boolean) or objects with depth 1, i.e. {a: 5, b: "boo", c: false}.

The results presented in this post do not apply anymore if the array is instead made of deeper objects, like for example:

{

ticker: 'AAPL',

currency: 'eur',

prices: {

daily: {

open: 500,

close: 600,

},

weekly: {

open: 480,

close: 570,

},

}

}which instead of .slice() (the winner in Chrome) require a deep-copying solution.

But that’s a story for another blog post.